Pre-Defined Run IDs

LangChain lets you generate and assign a run ID before your code is invoked, instead of waiting for one to be generated at run time. Because you know the ID ahead of time, you can reference the run before it exists, which is useful for actions like sending feedback. The example below demonstrates this.

%pip install langchain-core

# Set environment variables.

import os

os.environ['LANGCHAIN_TRACING_V2'] = 'true'

os.environ['LANGCHAIN_PROJECT'] = ''  # name of the project to trace to

os.environ['LANGCHAIN_API_KEY'] = ''  # your LangSmith API key

from langchain_core.runnables import RunnableLambda

import uuid

lambda1 = RunnableLambda(lambda x: x + 1)

lambda2 = RunnableLambda(lambda x: x * 2)

pre_defined_run_id = uuid.uuid4()

# pass in run_id to the RunnableConfig dict

chain = (lambda1 | lambda2).with_config(run_id=pre_defined_run_id)

print(pre_defined_run_id)

chain.invoke(1)

5e53e7bc-03a7-4cba-822b-c5e75a9196cc

4

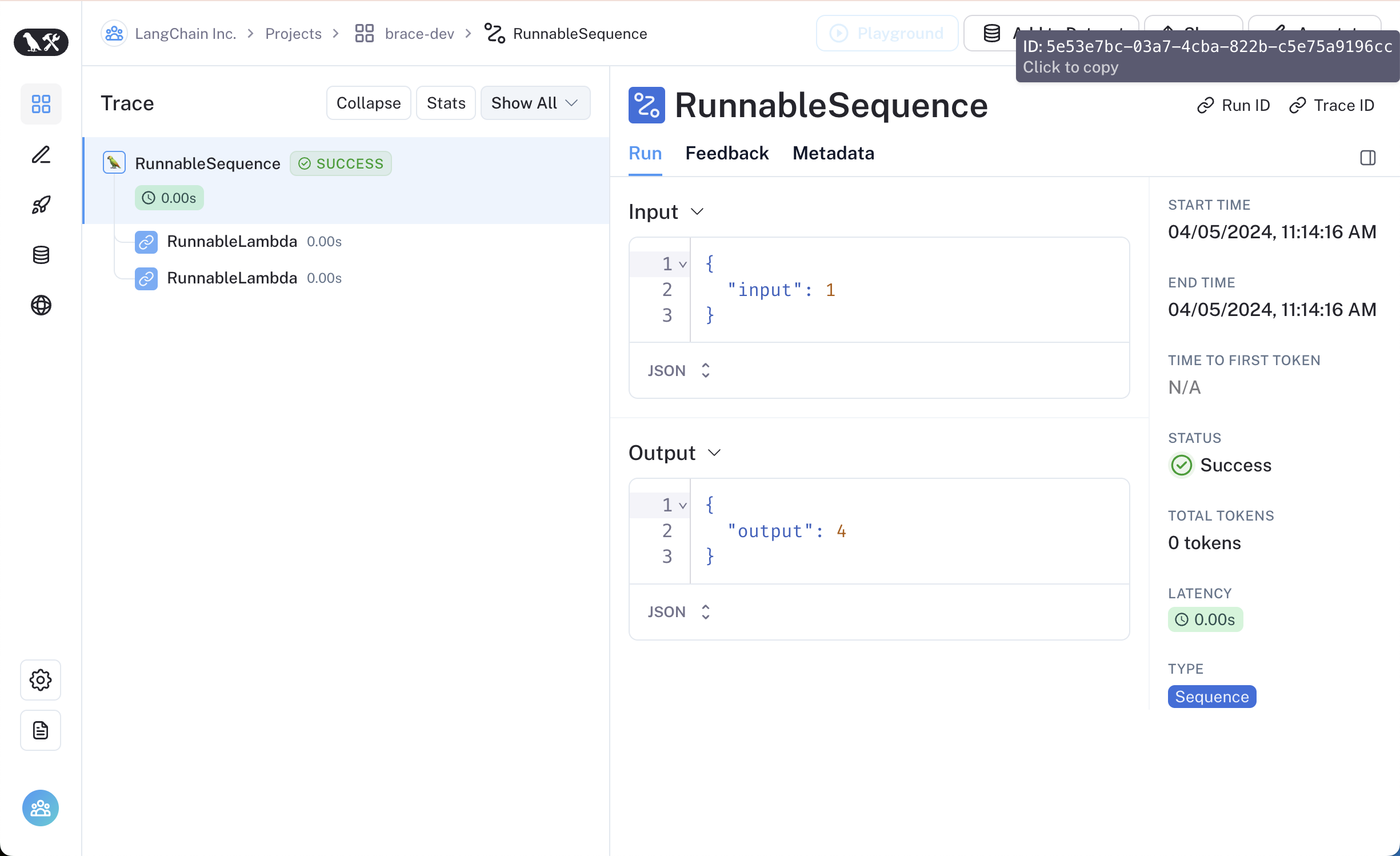

Great! Now, if we inspect the trace, and more specifically the trace's run_id, we can see that it matches the pre_defined_run_id we logged above.
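Because the ID exists before the run does, an application can record it against its own identifiers up front and look it up later, for example when feedback arrives. Below is a minimal sketch of that bookkeeping using only the standard library. `RunIdRegistry` and the message IDs are hypothetical names for illustration; they are not part of LangChain or LangSmith.

```python
import uuid


class RunIdRegistry:
    """Hypothetical helper: maps an app-level message ID to a pre-generated run ID."""

    def __init__(self):
        self._run_ids = {}

    def reserve(self, message_id: str) -> uuid.UUID:
        # Generate the run ID before any chain or model is invoked.
        run_id = uuid.uuid4()
        self._run_ids[message_id] = run_id
        return run_id

    def lookup(self, message_id: str) -> uuid.UUID:
        # Raises KeyError if no run ID was reserved for this message.
        return self._run_ids[message_id]


registry = RunIdRegistry()
reserved = registry.reserve("message-1")
# `reserved` is the value you would pass as `run_id` when invoking the chain.
print(registry.lookup("message-1") == reserved)
```

In a real application the mapping would probably live in a database or session store rather than in memory, so that the ID survives between the request that starts the run and the one that submits feedback.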

Now, let's see how we can implement this for sending feedback on a run.

We'll use Anthropic in this example, but you can swap it with any LLM you'd like.

First, set the required environment variables:

os.environ['ANTHROPIC_API_KEY'] = 'sk-ant-...'

Install dependencies:

%pip install langchain-anthropic langsmith

from langchain_anthropic import ChatAnthropic

llm = ChatAnthropic(model_name="claude-3-haiku-20240307")

llm_feedback_uuid = uuid.uuid4()

res = llm.invoke("Did I implement this correctly?", config={"run_id": llm_feedback_uuid})

1aea0dde-c893-487a-bc02-17fe5d1e0b40

llm_feedback_uuid

UUID('18011750-e5c7-46b7-bc56-90c352fb87a0')

Because we already know the run ID, we can send feedback on the run without reading the LLM's response.

from langsmith import Client

client = Client()

client.create_feedback(llm_feedback_uuid, "user_feedback", score=1)

Feedback(id=UUID('d9ed2d40-26aa-405c-9ee2-6b4c19486d8c'), created_at=datetime.datetime(2024, 4, 5, 19, 22, 7, 670095, tzinfo=datetime.timezone.utc), modified_at=datetime.datetime(2024, 4, 5, 19, 22, 7, 670098, tzinfo=datetime.timezone.utc), run_id=UUID('31336494-6a30-415a-ba26-8a343fdb333a'), key='user_feedback', score=1, value=None, comment=None, correction=None, feedback_source=FeedbackSourceBase(type='api', metadata={}), session_id=None)

Then, if we inspect the LangSmith run, we'll see the feedback is linked to the run we just executed.

Pre-defined run IDs are also helpful for pre-signed feedback URLs. Use these when you can't expose API keys or other secrets to the client, e.g. in a web application. Given a pre-determined run_id, LangSmith's create_presigned_feedback_token creates a URL for sending feedback that requires no secrets.

Let's see how we can implement this:

# Define your UUID for the `run_id`

pre_signed_url_id = uuid.uuid4()

pre_signed_url = client.create_presigned_feedback_token(pre_signed_url_id, "user_feedback")

print(pre_signed_url)

id=UUID('9491a59d-e345-4713-813a-95dfbc39f3b5') url='https://api.smith.langchain.com/feedback/tokens/9491a59d-e345-4713-813a-95dfbc39f3b5' expires_at=datetime.datetime(2024, 4, 5, 22, 28, 8, 976569, tzinfo=datetime.timezone.utc)

Here, we can see that even though we haven't created a run yet, we're still able to generate the feedback URL.
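The printed token also carries an `expires_at` timestamp, so a pre-signed URL is only usable for a limited time. If your application hands the URL to a client, it may be worth checking the expiry first. The sketch below assumes `expires_at` is a timezone-aware `datetime`, as in the output above; `token_is_usable` and the 30-second margin are hypothetical choices, not part of the LangSmith client.

```python
from datetime import datetime, timedelta, timezone


def token_is_usable(expires_at, now=None, margin=timedelta(seconds=30)):
    """Return True if more than `margin` remains before the token expires."""
    now = now or datetime.now(timezone.utc)
    return expires_at - now > margin


# Illustrative values shaped like the output above, not real token data.
expires_at = datetime(2024, 4, 5, 22, 28, 8, tzinfo=timezone.utc)
early = datetime(2024, 4, 5, 19, 30, 0, tzinfo=timezone.utc)
late = datetime(2024, 4, 5, 22, 28, 0, tzinfo=timezone.utc)

print(token_is_usable(expires_at, now=early))  # plenty of time left
print(token_is_usable(expires_at, now=late))   # only 8 seconds left
```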

Now, let's invoke our LLM so the run with that ID is created:

res = llm.invoke("Have you heard the news?! LangSmith offers pre-signed feedback URLs now!!", config={"run_id": pre_signed_url_id})

Then, once our run is created, we can use the feedback URL to send feedback:

import requests

url_with_score = f"{pre_signed_url.url}?score=1"

response = requests.get(url_with_score)

if response.status_code >= 200 and response.status_code < 300:
    print("Feedback submitted successfully!")
else:
    print("Feedback submission failed!")

Feedback submitted successfully!
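Appending `?score=1` by hand works for a single numeric value, but it breaks if the URL already has a query string or if a value needs URL-encoding. Here is a small sketch of a safer way to build the feedback URL with the standard library. `add_query_params` is a hypothetical helper, and the example URL is the one printed earlier in this notebook; in practice you would pass `pre_signed_url.url`.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_query_params(url: str, **params) -> str:
    """Return `url` with `params` merged into its query string, URL-encoded."""
    parts = urlsplit(url)
    query = dict(parse_qsl(parts.query))
    query.update({key: str(value) for key, value in params.items()})
    return urlunsplit(parts._replace(query=urlencode(query)))


# URL copied from the token output shown earlier, used here for illustration only.
example_url = (
    "https://api.smith.langchain.com/feedback/tokens/"
    "9491a59d-e345-4713-813a-95dfbc39f3b5"
)
url_with_score = add_query_params(example_url, score=1)
print(url_with_score)
```

The resulting string can be passed to `requests.get` exactly as in the cell above.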